Testing your site content, whether through multivariate tests (MVTs) or simple A/B tests, is a practice that allows your business to assess the performance of proposed site design changes against each other. At its heart, testing is about defining what you know and what you do not. Below, we cover testing best practices to help you make the decisions that increase revenue for your company.

Reasons to Test

Just about any open question is a reason to test. Testing’s real value, however, goes far beyond settling a disagreement about the color of a Buy Now button. Testing puts your customers’ experience at the center of your decision making. Rather than rely on gut intuition or the most fiercely held opinion on the team, testing allows your company to ask your customers which option works for them. That gives you the ability to make business decisions based on hard data from real customer experiences. Careful testing is an essential part of your ongoing optimization efforts.

Resistance to Testing

Given the granular user data now available to any online company, setting up and running a test is not technically challenging. Resistance to testing more often stems from a lack of will among decision makers in the company. Most reluctance comes from a fear of losing revenue. Especially at the end of a quarter, or when other pressures are mounting, it can be difficult to find the confidence to experiment with your cart in search of its best-performing version. Resistance can also come from a general fear of the unknown, from the attitude of the highest-paid person in the room, or from previous testing experiences. Once the buy-in exists, however, the process for running a test is simple.

Testing Methodology

Strategic Goal

Todd Garcia, Senior Account Executive at cleverbridge, walked us through the process for establishing a test.

He recommends you begin the testing process by defining your strategic goal. For instance, Garcia once ran a test where the strategic goal was to raise the revenue associated with a particular product. While that goal was clear, there was more than one way to achieve it. Establishing this test required some discussion about which changes to implement. As he discussed the test with the software merchant, they began to flesh out a hypothesis for the test.

Hypothesis

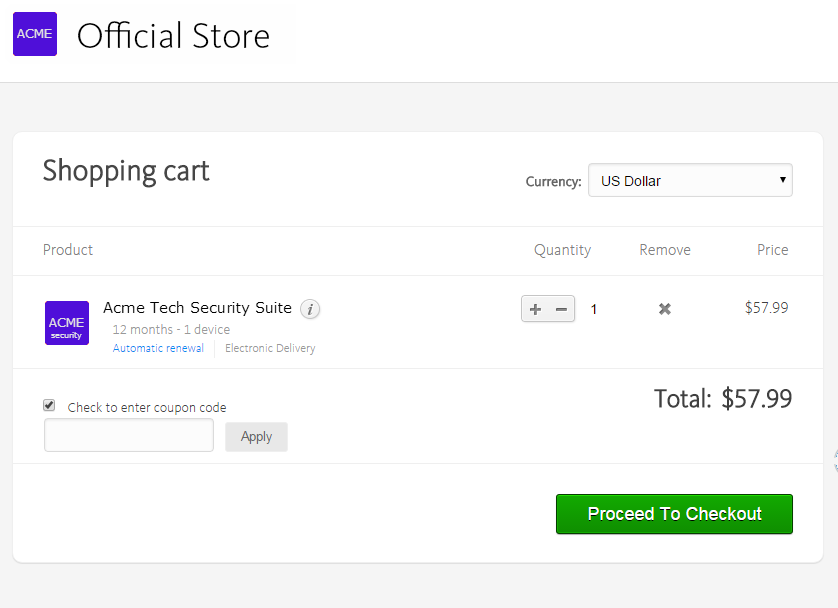

Garcia noted that, “It’s important to define a hypothesis that is testable and results can easily be defined through data analysis. Meaning, your hypothesis should be based on realistic KPIs for that specific test.” In this case, the hypothesis was that they could raise the average order value of the product by removing the field to enter a coupon code in a shopping cart.

Test

To get meaningful results, do not simply implement your change and compare it to historical data. Garcia explained, “Customers act differently in different times of the year, different days of the week, different hours of the day, etc., … so it’s important to test your variations in real time.” Your original design becomes your control design. The control shows you how your current design performs during the same time period and under the same conditions as your test design. The test design is implemented alongside your control, and customers are split between one design and the other.
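One common way to split customers between the control and the test design is to hash a visitor identifier, so that the same visitor always lands on the same design. The sketch below is only an illustration of that idea, not the mechanism used in Garcia's test: the function name `assign_variant`, the experiment label `coupon-field-test`, and the 50/50 split are all assumptions made for the example.

```python
import hashlib


def assign_variant(visitor_id: str,
                   experiment: str = "coupon-field-test",  # hypothetical label
                   test_share: float = 0.5) -> str:
    """Return "test" or "control" for a visitor, deterministically.

    Hashing the experiment label together with the visitor ID means a
    returning visitor sees the same design for the whole test, and a
    different experiment label reshuffles visitors independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash onto the interval [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "test" if bucket < test_share else "control"


# Example: the same (made-up) visitor ID always gets the same design.
print(assign_variant("visitor-12345"))
```

Because both designs run over the same period and receive a comparable mix of traffic, seasonal and time-of-day effects fall on both groups equally.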

Assess

How did the two variations of your page perform? Did one do better? How well did your hypothesis hold up against the results? In this case, it turned out the hypothesis was incorrect. Average order value did not increase or decrease in any significant way with the removal of the coupon field. The test did, however, produce a surprising finding.

At the start of the test, the conversion rate was expected to remain constant. Remarkably, the conversion rate increased by 10 percent. The results indicated something very specific about customer behavior when presented with the option to enter a coupon code. More customers completed their purchase when they were not prompted to enter a code.
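When you assess a difference in conversion rate like this one, it helps to check whether the gap is larger than random variation would explain. A minimal sketch of one standard approach, a pooled two-proportion z-test, follows. The visitor and order counts are invented for illustration and are not the figures from Garcia's test; the function name `compare_conversion` is likewise hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def compare_conversion(control_visitors: int, control_orders: int,
                       test_visitors: int, test_orders: int) -> dict:
    """Compare two conversion rates with a pooled two-proportion z-test."""
    p_control = control_orders / control_visitors
    p_test = test_orders / test_visitors
    # Pooled rate under the assumption that both designs convert equally.
    pooled = (control_orders + test_orders) / (control_visitors + test_visitors)
    std_err = sqrt(pooled * (1 - pooled)
                   * (1 / control_visitors + 1 / test_visitors))
    z = (p_test - p_control) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "relative_lift": (p_test - p_control) / p_control,
        "z": z,
        "p_value": p_value,
    }


# Made-up counts: 5.0% vs 5.5% conversion, a 10 percent relative lift.
result = compare_conversion(10_000, 500, 10_000, 550)
print(result)
```

With these made-up counts, the p-value comes out above the common 0.05 threshold, which shows why a lift of this size needs enough traffic behind it before you act on it.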

We can only guess what was happening in the minds of the customers, but it could be that some were abandoning their cart to search for coupon codes and not returning. Perhaps they did not find the code and gave up. Perhaps they found a discount from a reseller, and were not buying the product directly from the publisher. Or perhaps they were finding a different product altogether. In any case, removing the obstacle to converting kept a greater number of customers engaged with this client’s product.

Outcomes

In discussing the outcomes of the test, Garcia noted, “My hypothesis was proven wrong, which is totally fine because something even cooler came out of it. Online retailers include the coupon code option because they would rather have customers come back with a coupon than not buy at all. But based on the results of the test, they’re just not coming back. The test teaches us that if customers are in your cart, they want to buy. So don’t push them away.”

Keystone

Good business intuition is essential to any successful enterprise, but those gut feelings can sometimes prove incorrect. The potential risk of running tests is far outweighed by the value of confirming or refuting your hunches with real data. Working with a solid methodology ensures your tests are meaningful, and decisions based on their outcomes have a far better chance of helping you achieve your strategic goals.

Learn more about how to increase revenue with our Ultimate Guide to Conversion Rate Optimization.